Semantic Pyramid for Image Generation

Supplementary Material

* Please allow a few seconds for the page to fully load

* Click under each video to play it.

- Image Interpolation in Latent Space

- Random Image Samples from Increasing Semantic Levels (Section 4)

- Image Generation from Line Drawings, Paintings and Grayscale Images (Section 4.2)

- Image re-labeling (Section 4.2)

- Semantic Image Composition (Section 4.2)

- Comparison with GAN-based Feature Inversion Method

- Sample Images from our User Study (Section 4.1)

Image Interpolation in the Latent Space

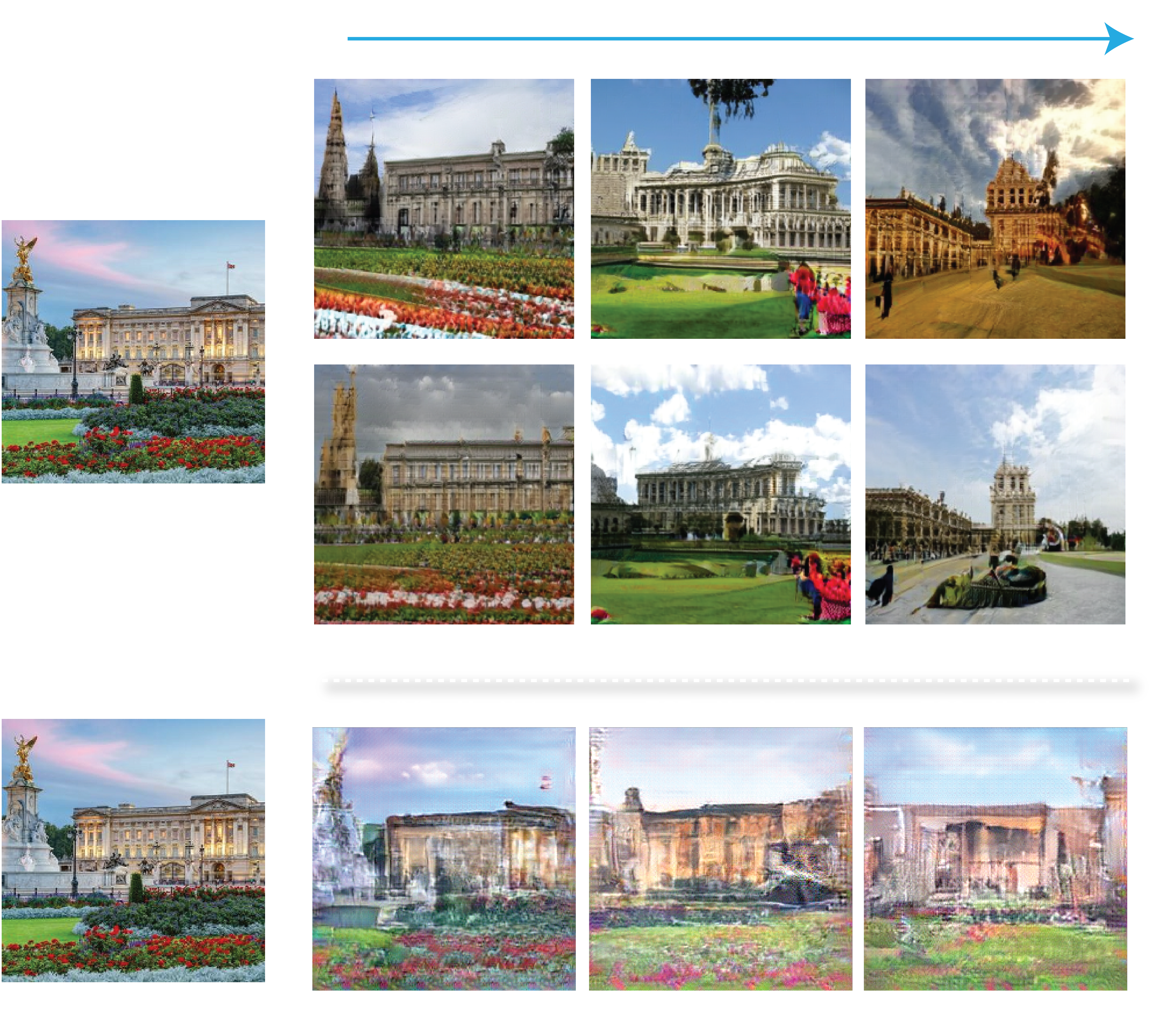

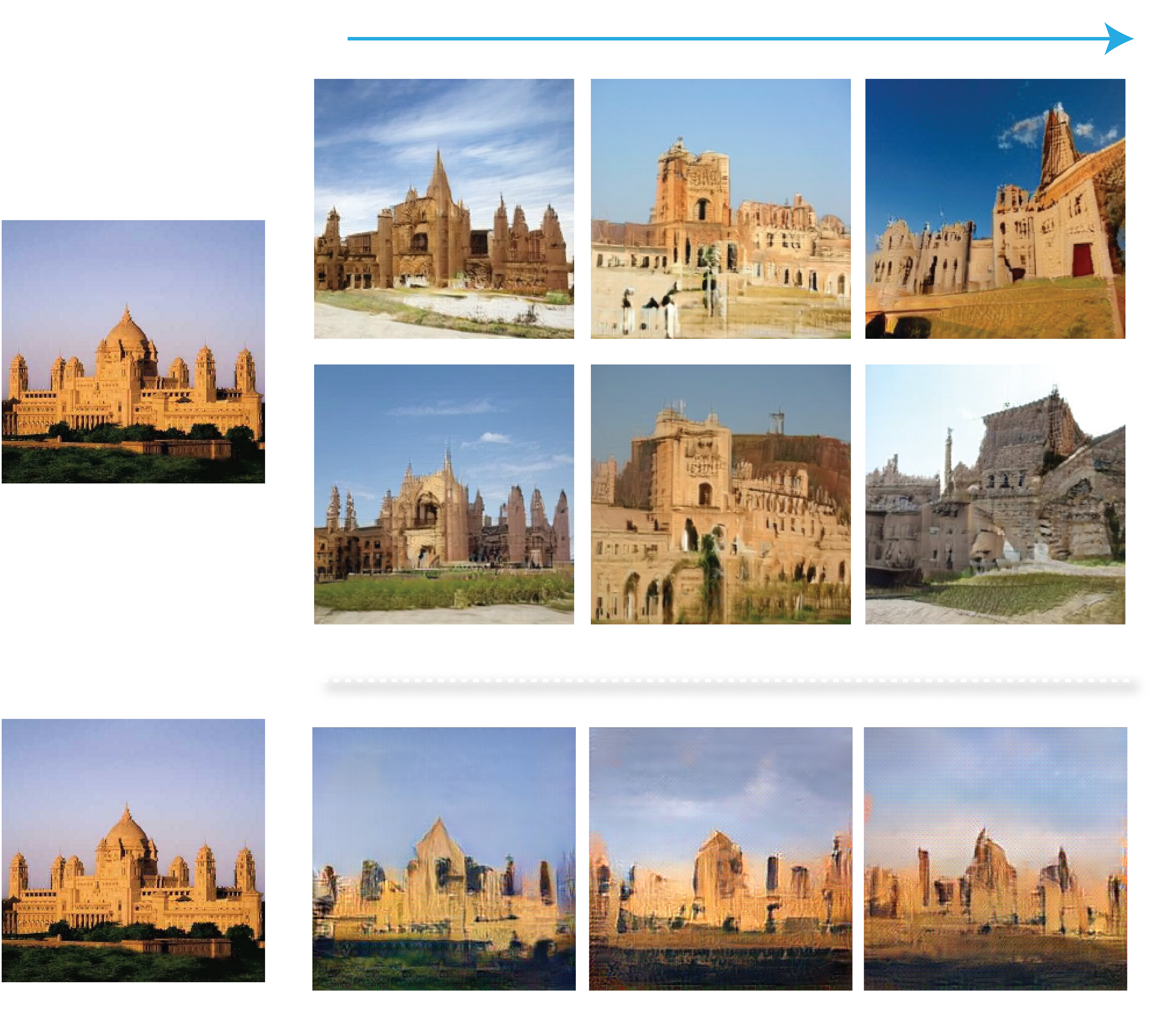

Geneation from increasing semantic pyramid levels . We show image samples generated by our model when fed with features from increasing sematic levels (CONV4 to FC8). The image samples were generated by sampling 5 different input noise vectors ("z") and linearly interpolating between the sampled noise vectors in the latent space.

Note how the diversity of the generated images increases with the level of generation, while the fidelity to the original image decreases. For all generation levels, the semantic content of the original image is preserved.

|

|||||

Re-painting. The masked regions (marked in gray in the second column) are re-generated from CONV5 features (extracted from the original image on the left column), while the unmasked regions are preserverd (see Section 4.2 and Figure 6 in the paper). The video depicts our re-painting results when linearly interpolating between 5 different input noise vectors ("z").

Our model generates diverse region samples controlled by the semantic content of the original image, and blends them naturally with the unmasked regions.

|

|||

|

Random Image Samples from Increasing Semantic Levels (Section 4)

We show additional results of image samples generated by our model when fed with features from increasing semantic levels (CONV1 to FC8, exculding CONV2 and CONV3 due to their visual similarity to CONV1). This allows us to generate images with a controllable extent of semantic similarity to a input image. These results supplement Figure 1 and Figure 2 in the paper.

Image Generation from Line Drawings, Paintings and Grayscale Images (Section 4.2)

We show additional generation results from unnatural input images including line drawing, paintings and grayscale images (supplement Figure 5 in the paper). We used CONV5 features extracted from the input images for all these results. Our generated image samples convey realistic image properties that do not exist in the original input images, including texture, lighting and colors.

Image re-labeling (Section 4.2)

Additional Re-labeling results obtained by feeding to our model a new, modified class label, yet using the original mid-level features (supplement Figure 1 and Figure 8 in the paper). Different semantic properties in the generated image are adopted to match the new class label, while the dominant structures are preserved.

Semantic Image Composition (Section 4.2)

Additional compositing results (supplement Figure 7 in the paper). Features from a source image (extracted from a region or a crop in the source image) are blended with features of the target image. Our model generates realistic images where the blended regions adopt in texture, lighting and colors to fit their surrounding.

"

Comparison with GAN-based Feature Inversion Method

We show a comparison to "Generating Images with Perceptual Similarity Metrics based on Deep Network", Dosovitskiy and Brox, NIPS'16, which is a GAN-based feature inversion method. The key differences to ours is: (i) they train a different model for feature inversion from each level; we have a single unified model that is able to invert

features from any selected layer(s). (ii) their model is deterministic -- the output is a single image for a given input features; our model allows to sample from the distribution of possible images. (iii) they did not explore nor designed their model for image manipulation tasks.

We used the authors' original implementation (their models are built on top of AlexNet and trained on ImageNet).

Sample Images from our User Study (Section 4.1)

We show sample image pairs used in our AMT user study (Section 4.1 in the paper). For each generation level, we show 4 different "Real/Fake" examples.

CONV1:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

CONV2:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

CONV3:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

CONV4:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

CONV5:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

FC7:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|

FC8:

| (a) | Real | Fake | (b) | Real | Fake |

|---|---|---|---|---|---|

|

|

|

|

||

| (c) | Real | Fake | (d) | Real | Fake |

|

|

|

|